Time-series forecasting is a very powerful analysis space this is vital to a number of clinical and business programs, like retail provide chain optimization, power and site visitors prediction, and climate forecasting. In retail use situations, for instance, it’s been noticed that bettering call for forecasting accuracy can meaningfully scale back stock prices and build up earnings.

Fashionable time-series programs can contain forecasting masses of hundreds of correlated time-series (e.g., calls for of various merchandise for a store) over lengthy horizons (e.g., 1 / 4 or yr away at day by day granularity). As such, time-series forecasting fashions want to fulfill the next key criterias:

- Skill to maintain auxiliary options or covariates: Maximum use-cases can receive advantages greatly from successfully the usage of covariates, as an example, in retail forecasting, vacations and product explicit attributes or promotions can impact call for.

- Appropriate for various information modalities: It must be capable to maintain sparse rely information, e.g., intermittent call for for a product with low quantity of gross sales whilst additionally having the ability to type tough steady seasonal patterns in site visitors forecasting.

A lot of neural communityâfounded answers had been ready to turn excellent efficiency on benchmarks and likewise give a boost to the above criterion. Then again, those strategies are usually gradual to coach and can also be pricey for inference, particularly for longer horizons.

In âLengthy-term Forecasting with TiDE: Time-series Dense Encoderâ, we provide an all multilayer perceptron (MLP) encoder-decoder structure for time-series forecasting that achieves awesome efficiency on lengthy horizon time-series forecasting benchmarks when in comparison to transformer-based answers, whilst being 5â10x sooner. Then in âOn the advantages of most probability estimation for Regression and Forecastingâ, we display that the usage of a in moderation designed coaching loss serve as in keeping with most probability estimation (MLE) can also be efficient in dealing with other information modalities. Those two works are complementary and can also be implemented as part of the similar type. Actually, they’ll be to be had quickly in Google Cloud AIâs Vertex AutoML Forecasting.

TiDE: A easy MLP structure for speedy and correct forecasting

Deep finding out has proven promise in time-series forecasting, outperforming conventional statistical strategies, particularly for giant multivariate datasets. After the luck of transformers in herbal language processing (NLP), there were a number of works comparing variants of the Transformer structure for lengthy horizon (the period of time into the longer term) forecasting, equivalent to FEDformer and PatchTST. Then again, different paintings has advised that even linear fashions can outperform those transformer variants on time-series benchmarks. However, easy linear fashions don’t seem to be expressive sufficient to maintain auxiliary options (e.g., vacation options and promotions for retail call for forecasting) and non-linear dependencies at the previous.

We provide a scalable MLP-based encoder-decoder type for speedy and correct multi-step forecasting. Our type encodes the previous of a time-series and all to be had options the usage of an MLP encoder. Due to this fact, the encoding is blended with long term options the usage of an MLP decoder to yield long term predictions. The structure is illustrated under.

|

| TiDE type structure for multi-step forecasting. |

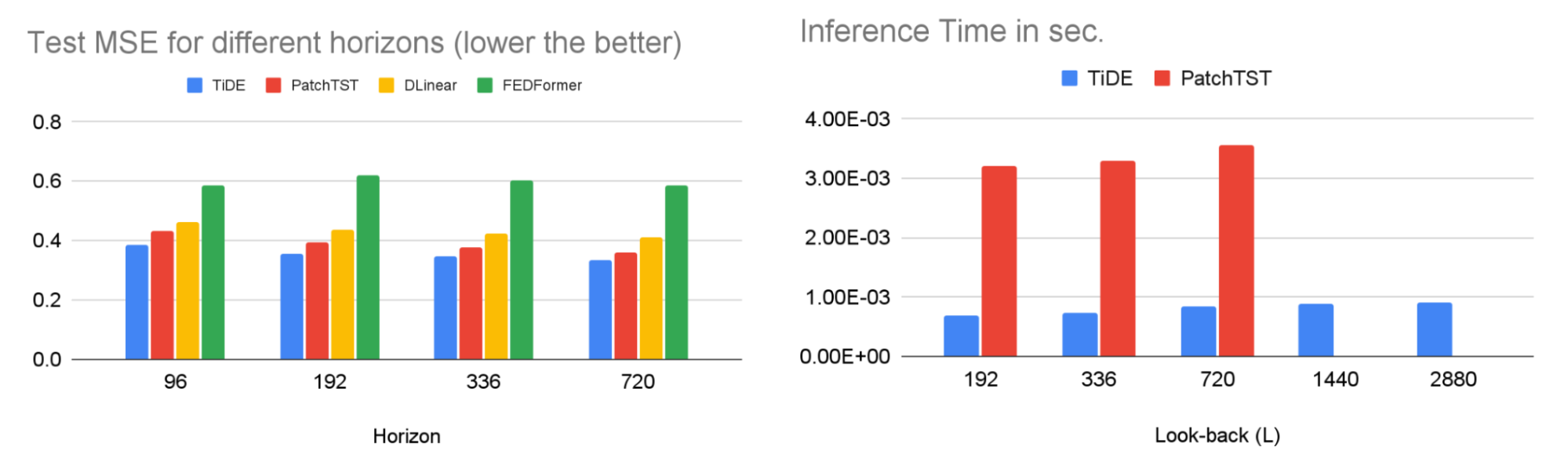

TiDE is greater than 10x sooner in coaching in comparison to transformer-based baselines whilst being extra correct on benchmarks. Identical beneficial properties can also be noticed in inference because it handiest scales linearly with the duration of the context (the selection of time-steps the type appears to be like again) and the prediction horizon. Beneath at the left, we display that our type can also be 10.6% higher than the most efficient transformer-based baseline (PatchTST) on a well-liked site visitors forecasting benchmark, with regards to check imply squared error (MSE). At the proper, we display that on the identical time our type will have a lot sooner inference latency than PatchTST.

|

| Left: MSE at the check set of a well-liked site visitors forecasting benchmark. Proper: inference time of TiDE and PatchTST as a serve as of the look-back duration. |

Our analysis demonstrates that we will profit from MLPâs linear computational scaling with look-back and horizon sizes with out sacrificing accuracy, whilst transformers scale quadratically on this state of affairs.

Probabilistic loss purposes

In maximum forecasting programs the top person is serious about well-liked goal metrics just like the imply absolute proportion error (MAPE), weighted absolute proportion error (WAPE), and many others. In such eventualities, the usual method is to make use of the similar goal metric because the loss serve as whilst coaching. In âOn the advantages of most probability estimation for Regression and Forecastingâ, accredited at ICLR, we display that this method would possibly no longer all the time be the most efficient. As an alternative, we suggest the usage of the utmost probability loss for a in moderation selected circle of relatives of distributions (mentioned extra under) that may seize inductive biases of the dataset all over coaching. In different phrases, as an alternative of without delay outputting level predictions that decrease the objective metric, the forecasting neural community predicts the parameters of a distribution within the selected circle of relatives that perfect explains the objective information. At inference time, we will expect the statistic from the discovered predictive distribution that minimizes the objective metric of passion (e.g., the imply minimizes the MSE goal metric whilst the median minimizes the WAPE). Additional, we will additionally simply download uncertainty estimates of our forecasts, i.e., we will supply quantile forecasts by means of estimating the quantiles of the predictive distribution. In different use situations, correct quantiles are important, as an example, in call for forecasting a store would possibly wish to inventory for the ninetieth percentile to protect towards worst-case eventualities and steer clear of misplaced earnings.

The selection of the distribution circle of relatives is the most important in such situations. For instance, within the context of sparse rely information, we would possibly wish to have a distribution circle of relatives that may put extra likelihood on 0, which is frequently referred to as zero-inflation. We advise a mix of other distributions with discovered combination weights that may adapt to other information modalities. Within the paper, we display that the usage of a mix of 0 and more than one unfavorable binomial distributions works neatly in a number of settings as it will possibly adapt to sparsity, more than one modalities, rely information, and information with sub-exponential tails.

|

| A mix of 0 and two unfavorable binomial distributions. The weights of the 3 elements, a1, a2 and a3, can also be discovered all over coaching. |

We use this loss serve as for coaching Vertex AutoML fashions at the M5 forecasting festival dataset and display that this straightforward exchange may end up in a 6% achieve and outperform different benchmarks within the festival metric, weighted root imply squared scaled error (WRMSSE).

| M5 Forecasting | WRMSSE |

| Vertex AutoML | 0.639 +/- 0.007 |

| Vertex AutoML with probabilistic loss      | 0.581 +/- 0.007 |

| DeepAR | 0.789 +/- 0.025 |

| FEDFormer | 0.804 +/- 0.033 |

Conclusion

We’ve got proven how TiDE, at the side of probabilistic loss purposes, permits rapid and correct forecasting that robotically adapts to other information distributions and modalities and likewise supplies uncertainty estimates for its predictions. It supplies state of the art accuracy amongst neural communityâfounded answers at a fragment of the price of earlier transformer-based forecasting architectures, for large-scale endeavor forecasting programs. We are hoping this paintings may also spur passion in revisiting (each theoretically and empirically) MLP-based deep time-series forecasting fashions.

Acknowledgements

This paintings is the results of a collaboration between a number of folks throughout Google Analysis and Google Cloud, together with (in alphabetical order): Pranjal Awasthi, Dawei Jia, Weihao Kong, Andrew Leach, Shaan Mathur, Petros Mol, Shuxin Nie, Ananda Theertha Suresh, and Rose Yu.